Heterogeneous Graph Neural Network

A graph is a representation that uses nodes to represent entities and edges to represent the relations between them. A heterogeneous graph has more than one class of node (each node belongs to exactly one class) and, typically, more than one class of edge. Graphs can represent a wide range of otherwise difficult problems, including path planning, genetic inheritance, social networking, and many others.

In this project we use PyTorch Geometric (PyG) to investigate how topics on Twitter evolve over time. Specifically, we study tweet threads relating to COVID-19 in the early months of 2020. Starting from raw tweets, we build a heterogeneous graph with the goal of predicting whether a tweet will be popular (receive many retweets). We use a pre-trained BERT language model for embeddings, together with PyG and its HeteroConv layer. An overview is at github.com/cwinsor/uml_twitter/blob/main/Instructions.pdf
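The core idea behind heterogeneous message passing can be sketched without PyG. The NumPy example below is a minimal, hypothetical sketch, not the project's model: it assumes two node types (`user`, `tweet`), two made-up relations (`posts`, `replies_to`), random features standing in for BERT embeddings, and untrained weights. Each relation gets its own weight matrix, neighbour features are mean-aggregated per relation, and results landing on the same node type are summed. This is roughly what PyG's `HeteroConv` does with its default `aggr="sum"` when it wraps one convolution per relation, though PyG's layers differ in detail.

```python
import numpy as np


def relation_mean(x_src, edge_index, num_dst):
    """Average source-node features over the incoming edges of each destination node."""
    src, dst = edge_index
    out = np.zeros((num_dst, x_src.shape[1]))
    counts = np.zeros(num_dst)
    np.add.at(out, dst, x_src[src])  # scatter-add neighbour features
    np.add.at(counts, dst, 1)        # in-degree per destination node
    has_neighbours = counts > 0
    out[has_neighbours] /= counts[has_neighbours][:, None]
    return out


def hetero_layer(x_dict, edge_dict, rel_weights, self_weights):
    """One heterogeneous layer: a self term per node type plus one transformed
    neighbour mean per relation, summed per destination type, then ReLU."""
    out = {ntype: x @ self_weights[ntype] for ntype, x in x_dict.items()}
    for (src_t, rel, dst_t), edge_index in edge_dict.items():
        agg = relation_mean(x_dict[src_t], edge_index, x_dict[dst_t].shape[0])
        out[dst_t] = out[dst_t] + agg @ rel_weights[(src_t, rel, dst_t)]
    return {ntype: np.maximum(h, 0.0) for ntype, h in out.items()}


# Hypothetical toy graph: 3 users, 5 tweets (tweet features stand in for BERT embeddings).
rng = np.random.default_rng(0)
hidden = 16
x_dict = {"user": rng.standard_normal((3, 4)), "tweet": rng.standard_normal((5, 8))}
edge_dict = {
    ("user", "posts", "tweet"): np.array([[0, 0, 1, 2, 2], [0, 1, 2, 3, 4]]),
    ("tweet", "replies_to", "tweet"): np.array([[1, 2, 4], [0, 0, 3]]),
}
rel_weights = {
    ("user", "posts", "tweet"): rng.standard_normal((4, hidden)),
    ("tweet", "replies_to", "tweet"): rng.standard_normal((8, hidden)),
}
self_weights = {"user": rng.standard_normal((4, hidden)), "tweet": rng.standard_normal((8, hidden))}

h = hetero_layer(x_dict, edge_dict, rel_weights, self_weights)
# Binary "popular / not popular" head on the tweet embeddings (untrained weights).
w_out = rng.standard_normal(hidden)
p_popular = 1.0 / (1.0 + np.exp(-(h["tweet"] @ w_out)))
```

In a trained model the weights would be learned from retweet labels; here they only show the shapes and the flow of information from users and replies into each tweet's embedding.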

Markov Logic Network and the Alchemy Toolset

https://github.com/cwinsor/alchemy_1_cwinsor/blob/main/markov_logic_networks_intro.pdf

A Markov Logic Network (MLN) is a technique for modeling systems that have structure but are inherently probabilistic. Structure is expressed in first-order logic, and techniques from Markov networks capture the uncertainty: each formula carries a weight, so a world that violates a formula becomes less probable rather than impossible. An MLN supports both deductive reasoning and probabilistic inference. The research is by Pedro Domingos and Matt Richardson at the University of Washington.
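To make the weighted-formula idea concrete, here is a minimal sketch that computes MLN probabilities by brute-force enumeration, using P(world) = exp(sum of weight times number of satisfied groundings) / Z. The domain (one constant `A`), the formula `Smokes(x) => Cancer(x)`, and the weight 1.5 are illustrative assumptions, not taken from Alchemy's examples; Alchemy itself uses far more scalable inference than enumerating every world.

```python
import itertools
import math

# Hypothetical toy MLN: one constant (A), two ground atoms, and one weighted
# formula Smokes(x) => Cancer(x) with an illustrative weight of 1.5.
ATOMS = ("Smokes(A)", "Cancer(A)")
WEIGHT = 1.5


def n_satisfied(world):
    """Number of true groundings of Smokes(x) => Cancer(x) in this world (0 or 1)."""
    return int((not world["Smokes(A)"]) or world["Cancer(A)"])


def world_distribution():
    """P(world) = exp(w * n(world)) / Z, enumerating every truth assignment."""
    worlds = [dict(zip(ATOMS, values))
              for values in itertools.product([False, True], repeat=len(ATOMS))]
    scores = [math.exp(WEIGHT * n_satisfied(w)) for w in worlds]
    z = sum(scores)  # partition function
    return [(w, s / z) for w, s in zip(worlds, scores)]


def prob_cancer_given_smokes():
    """Conditional query answered by brute-force summation over worlds."""
    dist = world_distribution()
    joint = sum(p for w, p in dist if w["Smokes(A)"] and w["Cancer(A)"])
    evidence = sum(p for w, p in dist if w["Smokes(A)"])
    return joint / evidence


print(round(prob_cancer_given_smokes(), 4))
```

The query comes out at about 0.82, i.e. 1 / (1 + e^-1.5): the world where A smokes but has no cancer violates the formula and is less likely, yet it keeps nonzero probability, which is exactly what separates an MLN from pure first-order logic.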

Alchemy is a toolset for researchers to study and implement Markov Logic Networks. Application examples come with the code and cover social networking, logistic regression, text classification, entity resolution, hidden Markov models, natural language processing, and Bayesian networks.

MLNs are a promising approach to modeling structured-yet-uncertain systems, and Alchemy paves the way for research and implementation.

Whole-Image Classification using Keras/TensorFlow

This project explores Convolutional Neural Networks (CNNs) and the Keras/TensorFlow library. The application is whole-image classification. The data is real-world, and classification is done in real time.

The task is to develop a self-driving (toy) car. The CNN learns to steer by classifying images from an on-board camera into left, right, or center steering values.
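The forward pass of such a whole-image classifier can be sketched in plain NumPy. This is a minimal, hypothetical sketch rather than the project's Keras model: the frame size, the four 3x3 filters, and the random, untrained weights are all assumptions, so the predicted label is arbitrary until the network is trained. It shows the pipeline convolution, ReLU, global average pooling, a dense layer, and a softmax over the three steering classes.

```python
import numpy as np

STEERING = ("left", "center", "right")


def conv2d_valid(image, kernel):
    """Single-channel 'valid' 2-D cross-correlation, as computed by a CNN conv layer."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def softmax(z):
    e = np.exp(z - z.max())  # subtract max for numerical stability
    return e / e.sum()


def classify_frame(image, kernels, dense_w, dense_b):
    """conv -> ReLU -> global average pool (one feature per kernel) -> dense -> softmax."""
    features = np.array([np.maximum(conv2d_valid(image, k), 0.0).mean() for k in kernels])
    probs = softmax(dense_w @ features + dense_b)
    return STEERING[int(np.argmax(probs))], probs


# Untrained, randomly initialised weights: the output label is arbitrary until trained.
rng = np.random.default_rng(0)
frame = rng.random((32, 32))                 # stand-in for a grayscale camera frame
kernels = rng.standard_normal((4, 3, 3))     # 4 conv filters
dense_w = rng.standard_normal((3, 4))        # 4 pooled features -> 3 steering classes
dense_b = np.zeros(3)
label, probs = classify_frame(frame, kernels, dense_w, dense_b)
```

In Keras the same stack would be assembled from `Conv2D`, `GlobalAveragePooling2D`, and `Dense(3, activation="softmax")` layers and trained with a categorical cross-entropy loss on labeled camera frames.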

The project was developed in conjunction with the MetroWest Boston Developers Machine Learning Group in Framingham, MA.

Tri-Training

We review the 2005 paper by Z.-H. Zhou and M. Li that introduces Tri-Training. This .pdf captures the details.

Tri-Training is a semi-supervised technique that uses unlabeled data to improve classifiers trained on a small labeled set. This matters because the large quantities of labeled data that supervised learning needs can be hard to obtain. Unlabeled data is much easier to come by but cannot be used directly by a supervised learner. Tri-Training bridges the gap: three classifiers label unlabeled examples for one another, which allows supervised models such as CNNs, naive Bayes classifiers, and decision trees to benefit from unlabeled data.
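The loop at the heart of Tri-Training can be sketched in a few lines. This is a deliberately simplified sketch under stated assumptions: the base learner (a 1-D nearest-centroid classifier), the data, and the fixed number of rounds are hypothetical, and it omits the paper's error-rate estimates and the conditions that decide whether a classifier is updated in a given round. What it keeps is the structure: bootstrap-trained initial classifiers, pseudo-labels from the agreement of the other two, retraining on the labeled set plus those pseudo-labels, and a final majority vote.

```python
import random


def fit_centroids(samples):
    """Base learner (assumption): nearest-centroid classifier on 1-D features."""
    sums, counts = {}, {}
    for x, y in samples:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict(model, x):
    return min(model, key=lambda y: abs(x - model[y]))


def bootstrap(labeled, rng):
    """Resample with replacement, redrawing until every class appears (toy-learner safeguard)."""
    classes = {y for _, y in labeled}
    while True:
        sample = [rng.choice(labeled) for _ in labeled]
        if {y for _, y in sample} == classes:
            return sample


def tri_train(labeled, unlabeled, rounds=3, seed=0):
    rng = random.Random(seed)
    # 1. Three initial classifiers, each trained on its own bootstrap sample.
    models = [fit_centroids(bootstrap(labeled, rng)) for _ in range(3)]
    for _ in range(rounds):
        updated = []
        for i in range(3):
            j, k = (m for m in range(3) if m != i)
            # 2. Points on which the other two classifiers agree get that label ...
            pseudo = [(x, predict(models[j], x)) for x in unlabeled
                      if predict(models[j], x) == predict(models[k], x)]
            # 3. ... and classifier i is retrained on the labeled set plus those points.
            updated.append(fit_centroids(labeled + pseudo))
        models = updated
    return models


def vote(models, x):
    """Final prediction: majority vote of the three classifiers."""
    preds = [predict(m, x) for m in models]
    return max(set(preds), key=preds.count)


labeled = [(0.0, "a"), (0.5, "a"), (5.0, "b"), (5.5, "b")]
unlabeled = [0.2, 0.8, 1.1, 4.6, 5.2, 6.0]
models = tri_train(labeled, unlabeled)
```

Any supervised learner with fit and predict steps could replace the nearest-centroid model; the agreement rule is what lets the unlabeled points contribute.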

OpenCV Machine Vision

The OpenCV machine vision library is a rich, deep, and broad resource. It includes vision-specific core elements as well as more broadly applicable algorithms such as machine learning, and it makes these available through Java, C++, and Python interfaces. In this project we start with the calib3d module to show how a pinhole camera model can be applied to the task of identifying a Backgammon board that is arbitrarily positioned and seen in perspective.
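The geometry behind this can be sketched with NumPy alone. Under the pinhole model a 3-D point X maps to pixel x ~ K (R X + t), and because a Backgammon board is planar, the mapping from board coordinates to the image is a 3x3 homography that can be recovered from four corner correspondences with the direct linear transform (DLT). The camera intrinsics, board size, and pose below are hypothetical values chosen for illustration, and this is an unnormalized textbook DLT rather than OpenCV's implementation.

```python
import numpy as np


def project(K, R, t, points_3d):
    """Pinhole camera model: x ~ K (R X + t), followed by the perspective divide."""
    cam = points_3d @ R.T + t      # world -> camera coordinates
    pix = cam @ K.T                # camera -> homogeneous pixel coordinates
    return pix[:, :2] / pix[:, 2:3]


def homography_dlt(src, dst):
    """Direct linear transform: 3x3 H with dst ~ H src, from >= 4 point pairs."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    H = vt[-1].reshape(3, 3)       # null-space vector = flattened H
    return H / H[2, 2]


def apply_homography(H, pts):
    p = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return p[:, :2] / p[:, 2:3]


# Hypothetical camera and board: 800 px focal length, principal point (320, 240),
# a 0.5 m x 0.4 m board in its own Z = 0 plane, tilted 30 degrees about the x-axis.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
a = np.deg2rad(30.0)
R = np.array([[1.0, 0.0, 0.0], [0.0, np.cos(a), -np.sin(a)], [0.0, np.sin(a), np.cos(a)]])
t = np.array([-0.25, -0.2, 1.5])
board = np.array([[0.0, 0.0, 0.0], [0.5, 0.0, 0.0], [0.5, 0.4, 0.0], [0.0, 0.4, 0.0]])

image_corners = project(K, R, t, board)             # where the corners appear in the image
H = homography_dlt(board[:, :2], image_corners)     # board plane -> image
```

Once H is known, any board position (for example a point on the board) can be mapped into the image and back with its inverse. In OpenCV's calib3d module, `cv2.projectPoints` performs the projection (including lens distortion) and `cv2.findHomography` estimates H, with RANSAC available for noisy corner detections.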

Industrial Internet-of-Things / Connected Robotics

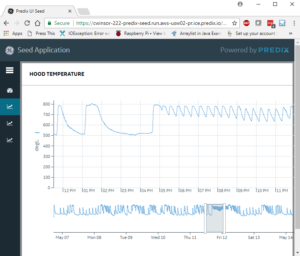

This sensor-to-cloud application applies Predix Cloud Foundry and GE Predix “best practice” technologies to capture light, temperature, and sound from an oil-fired burner for presentation and analysis on a cloud-based web dashboard. The system uses UAA, REST, and Node.js/Express for the cloud application environment. Interconnect is based on MQTT, WebSocket, and OSGi. A Raspberry Pi (BCM2836) is the edge device for sensor-based collection. A set of Linux scripts automates the build/deploy process. The same technology can be applied to autonomous/connected robots.

Machine Learning

In its supervised form, machine learning is an automated process that captures the relationship between independent (attribute) and target (class) variables. The result is a model that can be applied for prediction and analysis. Many ML techniques exist, and each is more or less suited to particular applications and data sets. The following papers investigate common ML techniques and their characteristics.

- Summary Comparison

- Rule-based Learning

- Genetic Algorithms

- Instance-based Learning

- Bayesian Networks

- Neural Networks

- Decision Trees

- Preprocessing and Attribute Selection
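To make the attribute-to-class idea concrete, here is a minimal sketch of one technique from the list, instance-based learning in its k-nearest-neighbour form: the "model" is simply the stored training examples, and a prediction is the majority class among the k closest ones. The training data is hypothetical, and plain Euclidean distance is an assumption; real attributes usually need scaling first, which is one of the preprocessing concerns listed above.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Predict the majority class among the k training examples nearest to `query`."""
    nearest = sorted(train, key=lambda ex: math.dist(ex[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical (attributes, class) pairs.
train = [
    ((1.0, 1.0), "low"), ((1.2, 0.8), "low"), ((0.9, 1.1), "low"),
    ((5.0, 5.0), "high"), ((5.2, 4.9), "high"), ((4.8, 5.1), "high"),
]
print(knn_predict(train, (1.1, 1.0)))  # → low
```

Unlike a decision tree or neural network, nothing is fitted ahead of time; all the work happens at prediction time, which is the defining trade-off of instance-based learning.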

Probabilistic Graphical Models

Probabilistic Graphical Models are a means to represent information and structure in the face of uncertainty. Stanford University researcher Dr. Daphne Koller and Andrew Ng (Google Brain, Baidu) are leaders in the field, and Koller's course on Coursera provides a thorough introduction.
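As a small illustration of how a graphical model encodes structure under uncertainty, here is a sketch of a three-node Bayesian network (Rain and Sprinkler both influence WetGrass) queried by exact inference by enumeration. The network and all probabilities are illustrative assumptions, not material from the Coursera course. The graph structure lets the joint distribution factor into small local tables, and a query sums that joint over the unobserved variables.

```python
import itertools

# Hypothetical network: Rain -> WetGrass <- Sprinkler, with illustrative numbers.
P_RAIN = 0.2
P_SPRINKLER = 0.1
P_WET = {  # P(WetGrass = True | Rain, Sprinkler)
    (True, True): 0.99,
    (True, False): 0.9,
    (False, True): 0.8,
    (False, False): 0.0,
}


def joint(rain, sprinkler, wet):
    """Chain-rule factorisation implied by the graph structure."""
    p = (P_RAIN if rain else 1 - P_RAIN) * (P_SPRINKLER if sprinkler else 1 - P_SPRINKLER)
    p_wet = P_WET[(rain, sprinkler)]
    return p * (p_wet if wet else 1 - p_wet)


def p_rain_given_wet():
    """Inference by enumeration: sum the joint over the hidden variable (Sprinkler)."""
    num = sum(joint(True, s, True) for s in (True, False))
    den = sum(joint(r, s, True) for r, s in itertools.product((True, False), repeat=2))
    return num / den


print(round(p_rain_given_wet(), 3))
```

Observing wet grass raises the probability of rain from the prior 0.2 to about 0.74. Enumeration is exponential in the number of hidden variables, which is why practical PGM tools rely on variable elimination, belief propagation, or sampling instead.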

T-Tests (statistical significance)

T-tests are a widely accepted way to assess the statistical significance of experimental results. For example, they can be used to:

* Show machine learning algorithm “X” is superior to “Y”

* Show that version 2.0 of an algorithm produces the same results as version 1.0

* Show a sensor embedded in a robotic system performs at the same level as one in a (vendor-calibrated) bench environment.

References and Python code are in the notebooks HERE.
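For the first use case above (is algorithm "X" better than "Y"?), a common setup is a paired t-test on scores measured on the same folds or data splits. The sketch below computes the paired t statistic by hand; the per-fold accuracies are hypothetical, and the function name is illustrative rather than taken from the notebooks. The p-value then comes from the t distribution with n - 1 degrees of freedom; `scipy.stats.ttest_rel` computes both the statistic and the p-value.

```python
import math
import statistics


def paired_t_statistic(a, b):
    """Paired t statistic: mean difference divided by its standard error (df = n - 1)."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)  # sample standard deviation (n - 1)
    return statistics.mean(diffs) / se, n - 1


# Hypothetical per-fold accuracies of algorithms "X" and "Y" on the same 5 folds.
acc_x = [0.82, 0.85, 0.80, 0.84, 0.83]
acc_y = [0.80, 0.82, 0.79, 0.81, 0.80]
t, df = paired_t_statistic(acc_x, acc_y)
```

Here t is 6.0 with 4 degrees of freedom, well above the two-sided 5% critical value of about 2.78, so the difference would be judged significant. Pairing matters: testing the differences removes fold-to-fold variation that both algorithms share.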

The Bootstrap

The Bootstrap is “a computer-based method for assigning measures of accuracy to statistical estimates.” In essence, it estimates the standard error of a statistic computed from an empirical sample by repeatedly resampling that sample with replacement. It can be used to establish the significance of experimental results, and it gives a straightforward, step-by-step approach that can be applied to a wide variety of situations, including complicated distributions and complicated measures.

The git repository HERE includes Python code that implements the Bootstrap. The examples are taken from the text.
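The basic procedure fits in a few lines: draw many resamples of the same size with replacement, recompute the statistic on each, and take the standard deviation of those replicates as the standard error. This is a minimal sketch with a hypothetical function name and sample, not the repository's code; the replicate count and seed are arbitrary choices.

```python
import random
import statistics


def bootstrap_se(data, statistic=statistics.mean, n_boot=2000, seed=0):
    """Bootstrap standard error: std. dev. of the statistic over resamples drawn with replacement."""
    rng = random.Random(seed)
    n = len(data)
    replicates = [statistic(rng.choices(data, k=n)) for _ in range(n_boot)]
    return statistics.stdev(replicates)


sample = list(range(1, 21))
print(round(bootstrap_se(sample), 2))
```

For the mean, the result lands close to the textbook formula, which is a useful sanity check. The real payoff is that the same call works unchanged for statistics with no simple standard-error formula, for example `bootstrap_se(sample, statistics.median)`.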

Speech-based Command and Control

Spoken language is increasingly being used as the primary user interface for devices in both consumer and industrial settings. The challenges of limited vocabulary, noise susceptibility, high computational cost, and speaker-specific training are being overcome. In this paper we investigate approaches for learning dialect in a speech-based command-and-control interface.

Linux Kernel Realtime Support

This study investigates features in the Linux kernel that reduce variability in interrupt latency, making multitasking possible even in demanding realtime applications. Specifically, the paper details the CONFIG_PREEMPT option and the PREEMPT_RT patch.
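One rough way to observe the latency variability these features target is to sleep for a fixed period many times and record how late each wake-up is; the spread between typical and worst-case lateness is what preemption options aim to shrink. The standard tool for this is `cyclictest` from the rt-tests suite, written in C. The Python sketch below follows the same idea but is an assumption-laden approximation: interpreter overhead adds its own jitter, and the 1 ms period and 200 iterations are arbitrary.

```python
import statistics
import time


def measure_wakeup_latency(period_s=0.001, iterations=200):
    """Sleep for a fixed period repeatedly and record how late each wake-up is (microseconds)."""
    lateness_us = []
    for _ in range(iterations):
        start = time.perf_counter()
        time.sleep(period_s)
        elapsed = time.perf_counter() - start
        lateness_us.append((elapsed - period_s) * 1e6)
    return {
        "min": min(lateness_us),
        "mean": statistics.mean(lateness_us),
        "max": max(lateness_us),
        "count": len(lateness_us),
    }


stats = measure_wakeup_latency()
print({k: round(v, 1) for k, v in stats.items()})
```

Running the same measurement under load on kernels built with and without CONFIG_PREEMPT or PREEMPT_RT, and comparing the maximum rather than the mean, mirrors how realtime kernels are usually evaluated.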